DeepSeek local deployment tutorial: even beginners can do it!

Installation environment

Operating system: Windows 10 or later

Tools to prepare:

- Ollama, a lightweight tool for running models; the AI model used here is DeepSeek-R1

- An AI model management tool: Cherry-Studio or AnythingLLM (choose one)

Resource Links:

https://pan.baidu.com/s/1RVWsJPRdPubjB9ppBTh4Bg

Extraction code: xyj1

Installation Steps

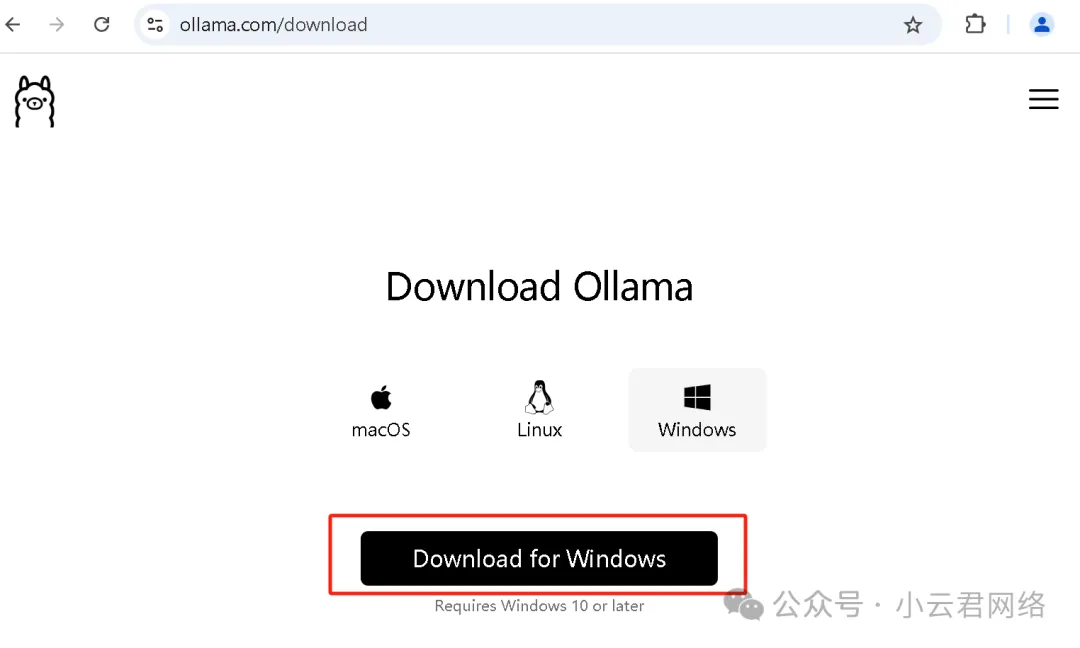

Step 1: Download and install Ollama and modify the environment variables

To run large models, you first need a good tool. Ollama is recommended: it is a lightweight tool that can quickly install and run large language models (such as DeepSeek, Llama, and Qwen).

Ollama download: the official website, https://ollama.com/download/, or my network disk (link at the beginning of the article).

Choose the installer for your operating system and install with the default options. Do not start Ollama right after installation: by default it downloads models to the C drive, and models such as DeepSeek and Qwen take up at least several GB each. Set Ollama's environment variables first.
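Before choosing where to store the models, it can help to confirm that the target drive has enough free space. The following is a minimal sketch using only Python's standard library; the helper names `free_gib` and `has_room_for_model` are made up for illustration, and the amount of space you need depends on the model you pick.

```python
import shutil


def free_gib(path: str) -> float:
    """Return the free space, in GiB, on the drive that holds `path`."""
    usage = shutil.disk_usage(path)
    return usage.free / 1024**3


def has_room_for_model(path: str, needed_gib: float) -> bool:
    """Return True if the drive holding `path` has at least `needed_gib` GiB free."""
    return free_gib(path) >= needed_gib
```

For example, `has_room_for_model("D:\\", 10)` would report whether drive D has at least 10 GiB free; the 10 GiB figure is only a placeholder.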

How to set environment variables:

(1) Press the Windows key and search for "environment variables".

(2) Follow the steps below to set the environment variables:

OLLAMA_HOST: 0.0.0.0

OLLAMA_MODELS: D:\Ollama model

- OLLAMA_HOST: Setting this to 0.0.0.0 makes the Ollama service listen on all network interfaces. By default, Ollama binds only to 127.0.0.1 (localhost).

- OLLAMA_MODELS: The directory where downloaded AI models are stored.
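To double-check that both variables are actually visible to new programs, a small script can read them back. This is a minimal sketch, not part of Ollama itself; the function name `missing_ollama_vars` is hypothetical. On Windows, newly created environment variables are only visible in terminals opened after the change, so run it from a fresh CMD window.

```python
import os
from typing import Mapping, Optional

# The two variables this tutorial sets.
EXPECTED = ("OLLAMA_HOST", "OLLAMA_MODELS")


def missing_ollama_vars(env: Optional[Mapping[str, str]] = None) -> list:
    """Return the names from EXPECTED that are unset or blank in `env`.

    When `env` is None, the current process environment is checked.
    """
    env = os.environ if env is None else env
    return [name for name in EXPECTED if not env.get(name, "").strip()]
```

An empty list from `missing_ollama_vars()` means both variables are set; any names in the list still need to be configured.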

(3) After installing Ollama and setting the environment variables, open a CMD command window and enter the following command to check the version:

ollama -v

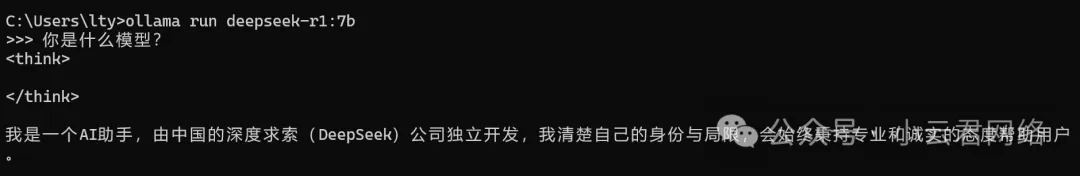
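The same version check can be scripted, which is handy if you want to confirm that Ollama is on the PATH before going further. This is a minimal sketch; the wrapper name `ollama_version` is made up for illustration, and it simply runs the `ollama -v` command shown above.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(executable: str = "ollama") -> Optional[str]:
    """Return the output of `<executable> -v`, or None if it is not on PATH."""
    path = shutil.which(executable)
    if path is None:
        return None
    result = subprocess.run(
        [path, "-v"], capture_output=True, text=True, timeout=30
    )
    # Fall back to stderr in case the version text is printed there.
    return (result.stdout or result.stderr).strip()
```

A return value of `None` suggests that the installation did not add Ollama to the PATH, or that the terminal was opened before installation.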

Step 2: Load the AI model (DeepSeek-R1 is used here)

(1) Open the Ollama model library at https://ollama.com/search and find the DeepSeek-R1 model. Because it is so popular, it is listed first.

Open the model page, select a size that matches your computer's configuration, and copy the corresponding command for later use.

Note: only the 671B version is the full DeepSeek-R1 model; the others are smaller distilled models. Choose a size according to your computer's configuration, as follows (if your hardware is too weak, the larger models will not run):

| Model | VRAM required | RAM required | Recommended GPU | Budget option |
| --- | --- | --- | --- | --- |
| 7B | 10-12GB | 16GB | RTX 3060 | Used 2060S |
| 14B | 20-24GB | 32GB | RTX 3090 | Dual 2080Ti |
| 32B | 40-48GB | 64GB | RTX 4090 | Rent a cloud server |
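The table above can be turned into a small lookup that suggests the largest model your hardware can handle. This is a rough sketch under two assumptions: it uses the lower bound of each video memory range as the threshold, and it assumes the 14B and 32B tags follow the same naming pattern as the `deepseek-r1:7b` tag used in this tutorial. The function name `pick_model` is made up for illustration.

```python
from typing import Optional

# (model tag, minimum VRAM in GB, minimum RAM in GB), largest model first.
# Thresholds are the lower bounds from the table; tag names are assumed
# to follow the deepseek-r1:<size> pattern.
REQUIREMENTS = [
    ("deepseek-r1:32b", 40, 64),
    ("deepseek-r1:14b", 20, 32),
    ("deepseek-r1:7b", 10, 16),
]


def pick_model(vram_gb: float, ram_gb: float) -> Optional[str]:
    """Return the largest model tag whose requirements are met, or None."""
    for tag, min_vram, min_ram in REQUIREMENTS:
        if vram_gb >= min_vram and ram_gb >= min_ram:
            return tag
    return None
```

For instance, a machine with 12GB of video memory and 16GB of RAM would get `deepseek-r1:7b`, while one with 8GB of video memory would get `None`, meaning none of the three sizes in the table fits.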

(2) Run the copied command in the CMD command window to download the model (because the environment variables were changed, it is no longer stored on the C drive):

ollama run deepseek-r1:7b

Once the model has loaded, you can start asking it questions at the prompt.
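Questions can also be sent from a script instead of the terminal, because Ollama exposes a local HTTP API while it is running. The following is a minimal sketch using only the standard library. It assumes Ollama is listening on its default address, http://127.0.0.1:11434, and that the `deepseek-r1:7b` model has already been downloaded; the helper names `build_generate_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumed default local address


def build_generate_request(prompt, model="deepseek-r1:7b", base_url=OLLAMA_URL):
    """Build a POST request for Ollama's /api/generate endpoint."""
    body = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="deepseek-r1:7b", timeout=300):
    """Send `prompt` to the local Ollama server and return the reply text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With Ollama running, `ask("Why is the sky blue?")` should return the model's answer as a string. Setting `"stream": False` asks for the whole reply in one JSON object rather than a stream of chunks.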

Press Ctrl+Z (or type /bye) to exit the chat and return to the command prompt, then enter the following command to list the downloaded models:

ollama list

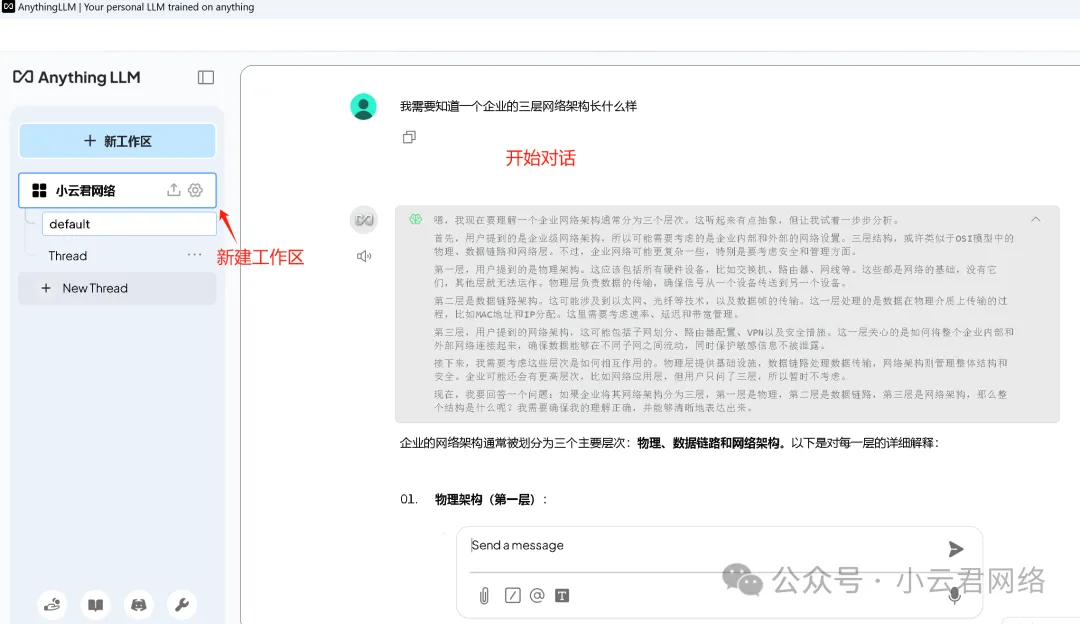
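The list of downloaded models is also available over Ollama's local HTTP API, which is useful when another program needs to know what is installed. This is a minimal sketch assuming the default address http://127.0.0.1:11434; the function names `model_names` and `list_local_models` are made up for illustration.

```python
import json
import urllib.request


def model_names(payload: dict) -> list:
    """Extract model names from a parsed /api/tags response."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url="http://127.0.0.1:11434", timeout=10):
    """Ask the local Ollama server which models are downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return model_names(json.loads(response.read().decode("utf-8")))
```

With Ollama running and the model from this tutorial downloaded, `list_local_models()` should include `deepseek-r1:7b`.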

For a more polished, graphical way to chat with the AI, you need a front-end tool such as Cherry-Studio or AnythingLLM. This tutorial describes how to deploy AnythingLLM.

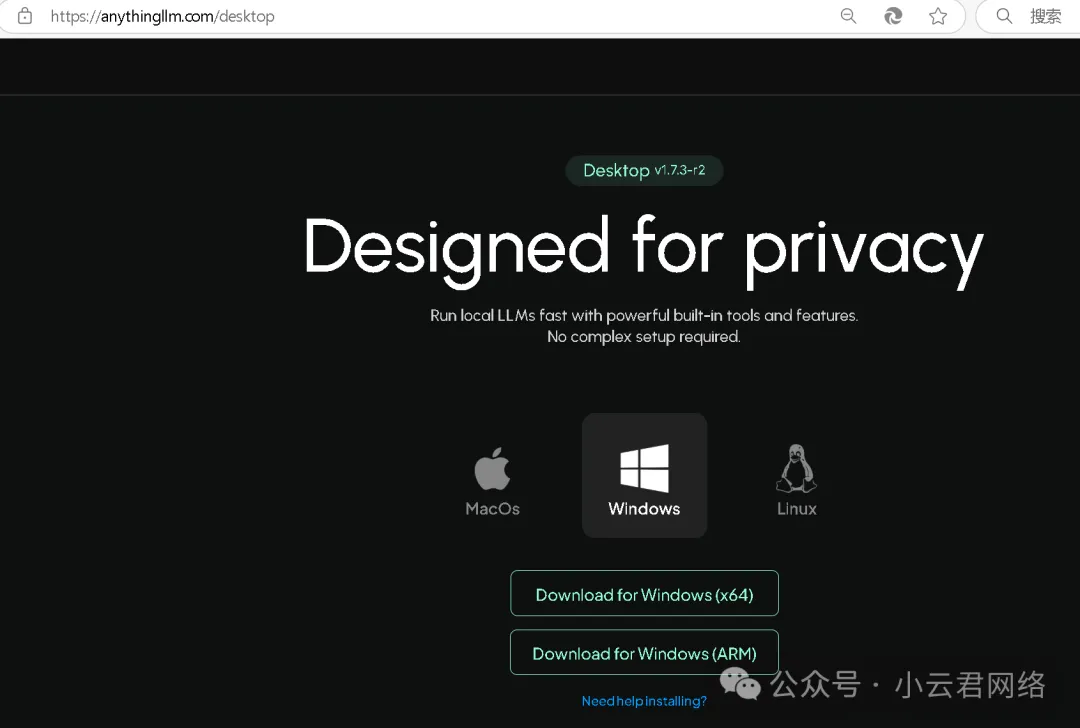

Step 3: Install AnythingLLM and load the DeepSeek model through Ollama

(1) Download: the official website, https://anythingllm.com/desktop, or my network disk (link at the beginning of the article).

(2) After installation, open AnythingLLM and click "Settings" to adjust the parameters:

Once that is set up, create your own workspace so you can chat through the graphical interface: